Are You Embarrassed By Your DeepSeek Abilities? Here Is What To Do

Author: Christel | Date: 25-02-03 19:32

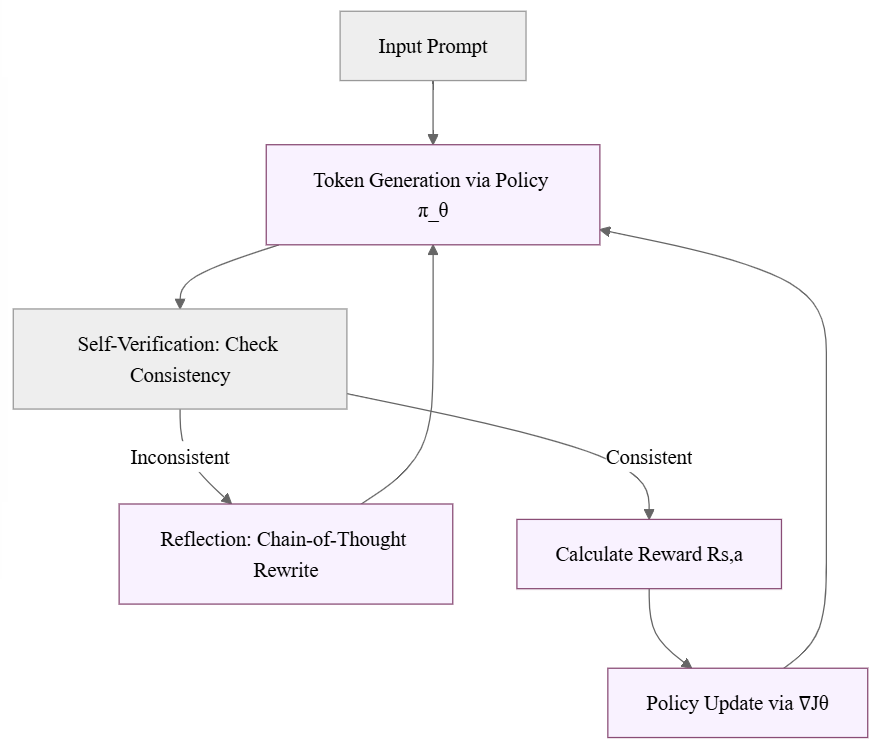

But DeepSeek has called that notion into question and threatened the aura of invincibility surrounding America’s technology industry. The current established technology of LLMs is to process input and generate output at the token level.

Overall, the process of testing LLMs and determining which ones are the right fit for your use case is a multifaceted endeavor that requires careful consideration of various factors. With the vast number of available large language models (LLMs), embedding models, and vector databases, it’s essential to navigate the choices wisely, as your decision will have important downstream implications. Trust me, this may save you pennies and make the process a breeze. Let’s dive in and see how you can easily set up endpoints for models, explore and compare LLMs, and securely deploy them, all while enabling robust model monitoring and maintenance capabilities in production. Now that you have all of the source documents, the vector database, and all of the model endpoints, it’s time to build out the pipelines to compare them in the LLM Playground.

To check the correctness of model outputs, we propose verifiable medical problems with a medical verifier. Finally, we introduce HuatuoGPT-o1, a medical LLM capable of complex reasoning, which outperforms general and medical-specific baselines using only 40K verifiable problems. Experiments show that complex reasoning improves medical problem-solving and benefits more from RL.
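
To make the idea of verifiable problems concrete, here is a minimal sketch of comparing models against problems that have checkable reference answers. Everything in it is an assumption for illustration: the problems, the model names `model_a` and `model_b`, and their outputs are hypothetical, and the rule-based `verify` function (compare the last number in an output with the reference) is a simple stand-in, not the verifier used by HuatuoGPT-o1 or by the LLM Playground.

```python
import re
from typing import Optional

# Hypothetical verifiable problems: each prompt has one checkable reference answer.
PROBLEMS = [
    {"prompt": "A 250 mg dose is given twice daily. How many mg per day?", "answer": "500"},
    {"prompt": "Convert 1.5 L to mL.", "answer": "1500"},
    {"prompt": "What is 3/4 of 200?", "answer": "150"},
]

# Hypothetical outputs from two models, one answer per problem, in the same order.
MODEL_OUTPUTS = {
    "model_a": ["The total is 500 mg.", "1.5 L equals 1500 mL.", "The result is 150."],
    "model_b": ["The total is 250 mg.", "That is 1500 mL.", "Answer: 150"],
}


def extract_final_number(text: str) -> Optional[float]:
    """Return the last number that appears in the text, or None if there is none."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text)
    return float(matches[-1]) if matches else None


def verify(output: str, reference: str) -> bool:
    """Toy verifier: the output counts as correct if its final number equals the reference."""
    value = extract_final_number(output)
    return value is not None and abs(value - float(reference)) < 1e-9


def accuracy(outputs: list, problems: list) -> float:
    """Fraction of problems whose output passes the verifier."""
    correct = sum(verify(out, prob["answer"]) for out, prob in zip(outputs, problems))
    return correct / len(problems)


for name, outputs in MODEL_OUTPUTS.items():
    print(f"{name}: {accuracy(outputs, PROBLEMS):.2f}")
# model_a: 1.00
# model_b: 0.67
```

Scoring every candidate model on the same verifiable set gives a like-for-like comparison. A production verifier would also need to handle units, free-text answers, and equivalent phrasings that a regular expression cannot.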

You can immediately see that the non-RAG model, which doesn’t have access to the NVIDIA financial data vector database, gives a different response that is also incorrect. These explorations are performed using 1.6B-parameter models and training data on the order of 1.3T tokens. While these distilled models generally yield slightly lower performance metrics than the full 671B-parameter model, they remain highly capable, often outperforming other open-source models in the same parameter range. For example, a 175-billion-parameter model that requires 512 GB to 1 TB of RAM in FP32 could potentially be reduced to 256 GB to 512 GB of RAM by using FP16. Although DualPipe requires keeping two copies of the model parameters, this does not significantly increase memory consumption, since we use a large EP size during training. We then scale one architecture to a model size of 7B parameters and training data of about 2.7T tokens. The use case also contains data (in this example, we used an NVIDIA earnings call transcript as the source), the vector database that we created with an embedding model called from HuggingFace, the LLM Playground where we’ll compare the models, and the source notebook that runs the entire solution.
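
The FP32-to-FP16 saving follows from bytes per parameter: FP32 stores each parameter in 4 bytes and FP16 in 2. The sketch below assumes decimal units (1 GB = 10^9 bytes) and counts the weights only, which gives 700 GB and 350 GB for 175 billion parameters, both inside the ranges quoted above. The upper ends of those ranges presumably leave room for activations and other runtime overhead, which this estimate deliberately ignores.

```python
# Storage size of one parameter, in bytes, for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    Activations, KV cache, optimizer state, and framework overhead are not included.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp32", "fp16"):
    print(f"175B parameters in {dtype}: {weight_memory_gb(175e9, dtype):.0f} GB")
# 175B parameters in fp32: 700 GB
# 175B parameters in fp16: 350 GB
```

Halving the bytes per parameter halves the weight memory, which is why the quoted FP16 range is exactly half of the FP32 range.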

Our approach, called MultiPL-T, generates high-quality datasets for low-resource languages, which can then be used to fine-tune any pretrained Code LLM. DeepSeek’s relatively recent entry into the market, combined with its open-source approach, has fostered rapid development. DeepSeek’s V3 and R1 models took the world by storm this week. He’s focused on bringing advances in data science to users so that they can leverage this value to solve real-world business problems. Code LLMs produce impressive results on high-resource programming languages that are well represented in their training data (e.g., Java, Python, or JavaScript), but struggle with low-resource languages that have limited training data available (e.g., OCaml, Racket, and several others). Code LLMs are also emerging as building blocks for research in programming languages and software engineering. This paper presents an efficient method for boosting the performance of Code LLMs on low-resource languages using semi-synthetic data. Confidence in the reliability and safety of LLMs in production is another critical concern. Only by comprehensively testing models against real-world scenarios can users identify potential limitations and areas for improvement before the solution is live in production.
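
Semi-synthetic training data is only useful if bad samples are filtered out, and a common way to do that for code is to run each candidate program against test cases and discard any that fail or crash. The sketch below is a simplified illustration of that test-based filtering idea, not the MultiPL-T pipeline itself: it uses Python functions as stand-ins for generated programs in a low-resource language such as OCaml or Racket, and the candidate functions and tests are hypothetical.

```python
from typing import Callable, List, Tuple

# A test case pairs a tuple of arguments with the expected return value.
TestCase = Tuple[tuple, object]


def passes_all(candidate: Callable, tests: List[TestCase]) -> bool:
    """Return True only if the candidate returns the expected value on every test."""
    for args, expected in tests:
        try:
            if candidate(*args) != expected:
                return False
        except Exception:
            # A crash counts as a failure, just like a wrong answer.
            return False
    return True


def filter_candidates(candidates: List[Callable], tests: List[TestCase]) -> List[Callable]:
    """Keep only the candidates that pass the full test suite."""
    return [c for c in candidates if passes_all(c, tests)]


# Hypothetical candidate implementations of "sum of squares".
def candidate_correct(xs):
    return sum(x * x for x in xs)


def candidate_off_by_one(xs):
    return sum(x * x for x in xs[1:])  # bug: skips the first element


def candidate_crashes(xs):
    return xs[0] ** 2 + candidate_crashes(xs[1:])  # bug: no base case, raises IndexError


TESTS: List[TestCase] = [(([1, 2, 3],), 14), (([],), 0), (([-2],), 4)]

kept = filter_candidates([candidate_correct, candidate_off_by_one, candidate_crashes], TESTS)
print([c.__name__ for c in kept])  # ['candidate_correct']
```

Treating exceptions as failures matters here: generated code often crashes rather than returning a wrong value, and both kinds of sample should be excluded from the fine-tuning set.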

This allows you to understand whether you’re using accurate and relevant data in your solution and to update it if necessary. In the fast-evolving landscape of generative AI, choosing the right components for your AI solution is critical. The Chinese entrepreneur, who founded a quantitative hedge fund in 2015 and led it to massive success, has shaken up the global artificial intelligence landscape with his language and reasoning model, DeepSeek-R1. A blog post demonstrates how to fine-tune ModernBERT, a new state-of-the-art encoder model, to classify user prompts and implement an intelligent LLM router. A robust framework that combines live interactions, backend configurations, and thorough monitoring is required to maximize the effectiveness and reliability of generative AI solutions, ensuring they deliver accurate and relevant responses to user queries. Is it free for the end user? If you need an API key, simply scroll down to API keys and issue a new API key; you can get one entirely free. A sample prompt: “Write code that can solve this math problem: If I get a salary of 1000 euros.” What you will notice most is that DeepSeek is limited by not containing all the extras you get with ChatGPT.
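
The blog post mentioned above fine-tunes ModernBERT to classify prompts for an LLM router. The sketch below shows only the routing logic around such a classifier. As a loudly labeled assumption, a keyword-overlap function stands in for the trained model, and the categories, keywords, and model names in `ROUTES` are hypothetical placeholders.

```python
import re

# Hypothetical route table: prompt category -> placeholder model name.
ROUTES = {
    "code": "code-model",
    "math": "reasoning-model",
    "general": "general-chat-model",
}

# Keyword sets stand in for a fine-tuned classifier such as ModernBERT.
KEYWORDS = {
    "code": {"code", "function", "python", "bug", "compile"},
    "math": {"math", "solve", "equation", "salary", "calculate"},
}


def classify(prompt: str) -> str:
    """Pick the category whose keywords overlap the prompt most; default to general."""
    tokens = set(re.findall(r"[a-z]+", prompt.lower()))
    scores = {label: len(tokens & words) for label, words in KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "general"


def route(prompt: str) -> str:
    """Return the name of the model that should handle this prompt."""
    return ROUTES[classify(prompt)]


print(route("Write code that can solve this math problem: If I get a salary of 1000 euros."))
# reasoning-model
```

In a real router, `classify` would call the trained model instead and could also return a confidence score, so that low-confidence prompts fall back to a general-purpose model.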