GitHub - Deepseek-ai/DeepSeek-R1

Author: Kia Lefroy | Date: 25-02-03 16:25

Reports in the media and discussions within the AI community have raised concerns about DeepSeek exhibiting political bias. DeepSeek collects data such as IP addresses and device information, which has raised potential GDPR concerns. Similar to the scrutiny that led to TikTok bans, worries about data storage in China and potential government access raise red flags. DeepSeek also deflects when asked about controversial subjects that are censored in China. The issue with DeepSeek's censorship is that it will make jokes about US presidents Joe Biden and Donald Trump, but it won't dare to add Chinese President Xi Jinping to the mix. While DeepSeek's performance is impressive, its development raises important discussions about the ethics of AI deployment.

Innovations: OpenAI regularly updates the model, using user feedback and AI advancements to refine its functionality and keep it relevant across different applications. In December 2024, OpenAI announced a new phenomenon they observed with their latest model, o1: as test-time compute increased, the model got better at logical reasoning tasks such as math olympiad and competitive coding problems. Also setting it apart from other AI tools, the DeepThink (R1) model shows you its actual "thought process" and the time it took to reach the answer before giving you a detailed response.

It completed its training with just 2.788 million GPU hours of computing time on powerful H800 GPUs, thanks to optimized processes and FP8 training, which accelerates calculations while using less energy. In contrast, ChatGPT's expansive training data supports diverse and creative tasks, including writing and general research. DeepSeek is a sophisticated open-source AI language model that aims to process vast amounts of data and generate accurate, high-quality language outputs within specific domains such as education, coding, or research. ZeRO: Memory Optimizations Toward Training Trillion Parameter Models. MLA (Multi-head Latent Attention) ensures efficient inference by significantly compressing the Key-Value (KV) cache into a latent vector, while DeepSeekMoE enables training strong models at an economical cost through sparse computation. Unlike other AI models that cost billions to train, DeepSeek claims it built R1 for much less, which has shocked the tech world because it shows you may not need huge amounts of money to build advanced AI. If you need multilingual support for general purposes, ChatGPT might be a better choice. DeepSeek responds faster on technical and niche tasks, while ChatGPT offers better accuracy in handling complex and nuanced queries. Regardless of which is better, we welcome DeepSeek as a formidable competitor that will spur other AI companies to innovate and deliver better features to their users.
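To make the KV-cache idea concrete, here is a toy Python sketch of caching one compressed latent vector per token instead of full per-head keys and values. It is not DeepSeek's implementation: the class and helper names, the tiny dimensions, the random weights, and placing an RMSNorm on the latent before caching are all illustrative assumptions, and real MLA details such as the decoupled rotary (RoPE) key are left out.

```python
import math
import random

def matvec(W, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def rand_matrix(rows, cols, rng):
    """Small random weights (hypothetical; a real model learns these)."""
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

def rms_norm(x, eps=1e-6):
    """RMSNorm without a learned gain (a simplification)."""
    scale = 1.0 / math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v * scale for v in x]

class LatentKVCache:
    """Toy latent KV cache: store one compressed vector per token and
    rebuild per-head keys/values from it when attention needs them."""

    def __init__(self, d_model, d_latent, n_heads, d_head, seed=0):
        rng = random.Random(seed)
        self.W_down = rand_matrix(d_latent, d_model, rng)           # hidden -> latent
        self.W_up_k = rand_matrix(n_heads * d_head, d_latent, rng)  # latent -> keys
        self.W_up_v = rand_matrix(n_heads * d_head, d_latent, rng)  # latent -> values
        self.full_kv_width = 2 * n_heads * d_head  # floats per token if K and V were cached directly
        self.latents = []

    def append(self, hidden):
        # Only the (normalized) latent vector is kept in the cache.
        self.latents.append(rms_norm(matvec(self.W_down, hidden)))

    def keys_values(self):
        # Keys and values are reconstructed on demand from the cached latents.
        keys = [matvec(self.W_up_k, c) for c in self.latents]
        values = [matvec(self.W_up_v, c) for c in self.latents]
        return keys, values

    def cached_floats(self):
        return sum(len(c) for c in self.latents)

    def uncompressed_floats(self):
        return self.full_kv_width * len(self.latents)

cache = LatentKVCache(d_model=64, d_latent=8, n_heads=4, d_head=16)
for t in range(10):
    cache.append([math.sin(t + i) for i in range(64)])
print(cache.cached_floats(), cache.uncompressed_floats())  # prints: 80 1280
```

With these made-up sizes the cache holds 8 floats per token instead of 128, which is the kind of saving the compression aims for; the trade-off is extra up-projection work whenever keys and values are rebuilt.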

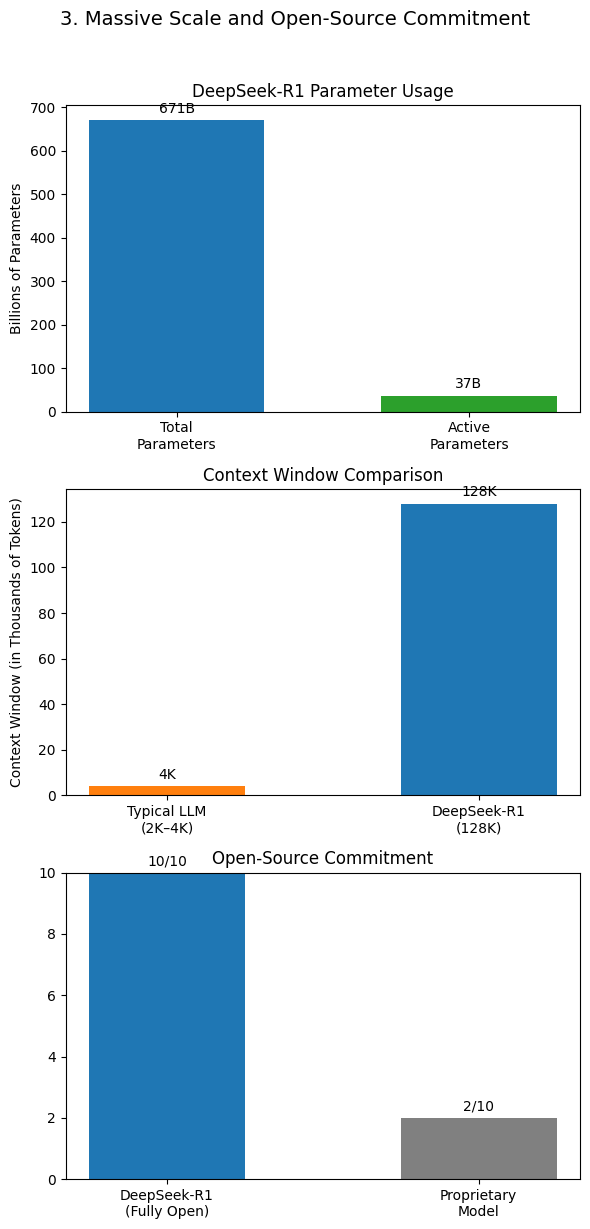

As we've seen in the past few days, its low-cost approach has challenged major players like OpenAI and may push companies like Nvidia to adapt. Newsweek contacted DeepSeek, OpenAI and the U.S. Bureau of Industry and Security via email for comment. DeepSeek did not immediately respond to a request for comment.

DeepSeek's specialization vs. ChatGPT's versatility: DeepSeek aims to excel at technical tasks like coding and logical problem-solving. DeepSeek's specialized modules offer precise support for coding and technical research. Using the reasoning data generated by DeepSeek-R1, DeepSeek fine-tuned several dense models that are widely used in the research community. DeepSeek relies heavily on large datasets, sparking data privacy and usage concerns. DeepSeek is a Chinese artificial intelligence company specializing in the development of open-source large language models (LLMs). DeepSeek reports that, at the large scale, it trained a baseline MoE model comprising 228.7B total parameters on 540B tokens. Architecture: the earlier GPT-3 model contained roughly 175 billion parameters. Parameters are like the building blocks of AI, helping it understand and generate language. DeepSeek excels in cost-efficiency, technical precision, and customization, making it ideal for specialized tasks like coding and research.
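Sparse computation in a mixture-of-experts (MoE) model means each token runs through only a few experts, so the parameters used per token are far fewer than the total parameter count. The sketch below is a generic top-k softmax router in plain Python, not DeepSeekMoE itself (which, among other things, adds shared experts and finer-grained experts); `ToyMoE`, the renormalized softmax over the chosen experts, and all sizes are hypothetical choices for illustration.

```python
import math
import random

def matvec(W, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

class ToyMoE:
    """Hypothetical top-k MoE layer: a router scores every expert,
    but only the top_k highest-scoring experts are evaluated."""

    def __init__(self, d_model, n_experts, top_k, seed=0):
        rng = random.Random(seed)

        def rand(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.router = rand(n_experts, d_model)                        # one score row per expert
        self.experts = [rand(d_model, d_model) for _ in range(n_experts)]
        self.top_k = top_k
        self.calls = [0] * n_experts                                  # how often each expert ran

    def forward(self, x):
        logits = matvec(self.router, x)
        chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        # Softmax over the selected experts only, so their weights sum to 1.
        m = max(logits[i] for i in chosen)
        weights = [math.exp(logits[i] - m) for i in chosen]
        z = sum(weights)
        out = [0.0] * len(x)
        for w, i in zip(weights, chosen):
            self.calls[i] += 1
            y = matvec(self.experts[i], x)  # unselected experts are never computed
            out = [o + (w / z) * yi for o, yi in zip(out, y)]
        return out

    def param_counts(self):
        """Return (total parameters, parameters active for one token)."""
        d = len(self.router[0])
        per_expert = d * d
        router = len(self.router) * d
        total = router + per_expert * len(self.experts)
        active = router + per_expert * self.top_k
        return total, active

moe = ToyMoE(d_model=16, n_experts=8, top_k=2)
y = moe.forward([1.0] * 16)
total, active = moe.param_counts()
print(len(y), total, active)  # prints: 16 2176 640
```

Here each token touches only 640 of the 2,176 parameters, the same total-versus-active split that lets a very large MoE model stay comparatively cheap per token.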

While they share similarities, they differ in development, architecture, training data, cost-efficiency, performance, and innovations. Its training reportedly cost less than $6 million, a shockingly low figure compared to the reported $100 million spent to train ChatGPT's 4o model. Training data: ChatGPT was trained on a wide-ranging dataset, including text from the Internet, books, and Wikipedia. DeepSeek-V3 is available across multiple platforms, including web, mobile apps, and APIs, catering to a wide range of users. Like DeepSeek-V2, DeepSeek-V3 also employs additional RMSNorm layers after the compressed latent vectors and multiplies additional scaling factors at the width bottlenecks. Specifically, during MMA (Matrix Multiply-Accumulate) execution on Tensor Cores, intermediate results are accumulated using a limited bit width. So it's typing into YouTube now, and then it's looking through the results. Performance: DeepSeek produces results comparable to some of the best AI models, such as GPT-4 and Claude-3.5-Sonnet. There's a reason phone brands are embedding AI tools into apps like the Gallery: focusing on more specific use cases is the best way for most people to interact with models of various kinds. Forbes reported that Nvidia's market value "fell by about $590 billion Monday, rose by roughly $260 billion Tuesday and dropped $160 billion Wednesday morning." Other tech giants, like Oracle, Microsoft, Alphabet (Google's parent company) and ASML (a Dutch chip equipment maker), also faced notable losses.
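Why does a limited accumulation bit width matter? The sketch below simulates it in plain Python by rounding the running sum to a few significand bits after every addition, then compares that with adding short partial sums into a full-precision total. The 8-bit width, the 128-term interval and the helper names are assumptions chosen to exaggerate the effect; this does not model FP8 inputs or real Tensor Core behavior.

```python
import math

def round_to_bits(x, mantissa_bits):
    """Round x to a float that keeps only `mantissa_bits` bits of significand."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)  # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** mantissa_bits
    return math.ldexp(round(m * scale) / scale, e)

def limited_width_sum(values, mantissa_bits):
    """Accumulate with the running sum rounded after every addition."""
    acc = 0.0
    for v in values:
        acc = round_to_bits(acc + v, mantissa_bits)
    return acc

def promoted_sum(values, mantissa_bits, interval):
    """Accumulate short chunks at limited width, then add each partial
    sum into a full-precision (Python float) total."""
    total = 0.0
    for start in range(0, len(values), interval):
        total += limited_width_sum(values[start:start + interval], mantissa_bits)
    return total

values = [1e-3] * 4096  # the exact sum would be 4.096
naive = limited_width_sum(values, mantissa_bits=8)
promoted = promoted_sum(values, mantissa_bits=8, interval=128)
print(f"limited-width accumulator: {naive:.4f}")
print(f"promoted every 128 terms:  {promoted:.4f}")
```

With these settings the narrow accumulator stops growing once each new term is smaller than half of its rounding step, while the chunked version stays close to the exact total. Periodically promoting partial sums to higher precision is, as we understand it, the general idea behind the mitigation DeepSeek describes for FP8 training, although its actual scheme runs on GPU hardware rather than in Python.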

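The headline training-cost figure is simple arithmetic on GPU hours. This sketch multiplies the 2.788 million H800 GPU hours quoted earlier in this post by an assumed rental price of $2 per GPU hour (to our knowledge, the rate DeepSeek's V3 technical report uses); it covers compute rental only, not salaries, hardware purchases or earlier experiments.

```python
# Back-of-the-envelope training cost from GPU hours.
GPU_HOURS = 2.788e6       # H800 GPU hours, as quoted in this post
USD_PER_GPU_HOUR = 2.0    # assumed rental price, not a measured cost

def compute_rental_cost(gpu_hours: float, usd_per_hour: float) -> float:
    """Return the GPU rental cost in US dollars."""
    return gpu_hours * usd_per_hour

cost = compute_rental_cost(GPU_HOURS, USD_PER_GPU_HOUR)
print(f"Estimated compute cost: ${cost / 1e6:.3f} million")  # prints: Estimated compute cost: $5.576 million

# Sensitivity to the assumed hourly rate.
for rate in (1.5, 3.0):
    print(f"At ${rate:.2f}/hour: ${compute_rental_cost(GPU_HOURS, rate) / 1e6:.3f} million")
```

At $1.50 or $3 per hour the same GPU hours would cost about $4.2 million or $8.4 million, so the headline number depends heavily on the assumed rate.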